
WHY DO I CONSIDER THE FOLLOWING MY GREATEST ACHIEVEMENT SINCE NEAR DEATH 30 YEARS AGO?

It is the final nail in the coffin of God, even the God-of-the-gaps! It shuts up the skeptic who asks, "But who lit the match at the big bang?"

The

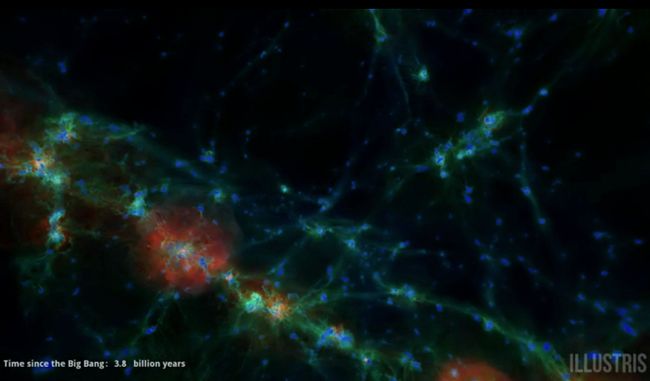

Cyclic Model of Turok was developed based on the three intuitive notions:

• the big bang is not the beginning of time, but rather a transition from an earlier phase of evolution;

• the evolution of the universe is cyclic;

• the key events that shaped the large scale structure of the universe occurred during a phase of slow contraction before the bang, rather than a period of rapid expansion (inflation) after the bang.

This is the model I extend, believing that this edition will live on forever.

Like the master, Darwin, arguing for life through evolution by natural selection!

Above all, there is only universe evolution, falsifiable like molecular evolution. My belief is that contraction/expansion at the big bang is the only way for the universe to evolve: very slowly, but time may be infinite! The genius of Darwin lay in enunciating natural selection as fundamental to evolution! Contraction/expansion allows natural selection of universes.

This article opposes two sets of cosmogonies, even while accepting scientific cosmology: every religious cosmogony, and all extant scientific cosmogonies (scientific narratives of how the universe came to be). Dismissing all religion-based cosmogonies is easy, on the principle of ignorance; it is pointless to argue with subanimals. Only cosmogonies consistent with astronomy, extended to cosmogony, are allowed.

Superiority to all extant cosmogonies follows from a very simple lack in them: none explains any physics principle without infinite regress! Where do ANY rules of our cosmos come from? Is that process consistent with every rule of cosmology, even those not yet understood? Every religion falls flat in its description of the universe: religions are simply analyses of limited observations by the excellent brains of their period, with no useful path-finding for scientists of the future! I set out a possible disproof and am a path-finder!

Science is falsifiable, says the great philosopher Popper. Next I define truths provably inaccessible to science. If true, these MUST extend science! They are true but provably impossible to test! In my words, falsifiability is extended by sentences that are true in limits, even limits that cannot be reached.

EXISTING COSMOGONIES

uniting the very small and very large indirect proof of big bang

impossible physics

NONE OF THE COSMOGONIES HAVE ANY IDEA OF the genesis and applicability of scientific principles! Mine is different in that the rules are the starting point! It assumes an infinite number of universes in the multiverse, each with origins infinitely earlier. Each universe has an infinite number of expansions and contractions, each pair resulting in a subtly different universe, in the idea of universe evolution! Each universe may or may not have intelligent life in it. Our universe has a current epoch with a set of rules conducive to the production of intelligent life: us. It has all its scientific rules from this evolution; there must be a series of rules that shows the current rules as dependent on other, more basic rules.

This justifies adopting a history of rules. Each derivation is falsifiable from the previous one. Not only does my thinking provide a basis for work, it provides a path to new theories. The evolution of life on Earth provides a wonderful collage of theories across multiple epochs: first Darwinian, and now an in-progress extension to molecular evolution expressed as phylogenetic trees. This works because the DNA of any organism consists of codewords over a 4-letter alphabet! One can measure distances between sequences in many ways, especially by incorporating molecular substitution costs.
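The following is a minimal sketch of one meaning of "incorporating molecular substitution costs." It computes a weighted edit distance that charges less for transitions (A↔G, C↔T) than for transversions, and adds a fixed cost for each inserted or deleted base. The specific costs of 1, 2 and 2 are illustrative assumptions, not fitted values. Real phylogenetic work uses statistically fitted substitution models.

```python
# Purine<->purine or pyrimidine<->pyrimidine changes are transitions;
# changes across the two classes are transversions.
PURINES = {"A", "G"}
PYRIMIDINES = {"C", "T"}

# Illustrative (assumed) costs, not values fitted to real data.
TRANSITION_COST = 1.0
TRANSVERSION_COST = 2.0
GAP_COST = 2.0


def substitution_cost(a: str, b: str) -> float:
    """Cost of replacing base a with base b."""
    if a == b:
        return 0.0
    same_class = {a, b} <= PURINES or {a, b} <= PYRIMIDINES
    return TRANSITION_COST if same_class else TRANSVERSION_COST


def weighted_edit_distance(s: str, t: str) -> float:
    """Minimum total cost to turn sequence s into sequence t (dynamic programming)."""
    n, m = len(s), len(t)
    # dp[i][j] holds the cheapest alignment cost of s[:i] with t[:j]
    dp = [[0.0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        dp[i][0] = i * GAP_COST
    for j in range(1, m + 1):
        dp[0][j] = j * GAP_COST
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            dp[i][j] = min(
                dp[i - 1][j] + GAP_COST,  # delete s[i-1]
                dp[i][j - 1] + GAP_COST,  # insert t[j-1]
                dp[i - 1][j - 1] + substitution_cost(s[i - 1], t[j - 1]),
            )
    return dp[n][m]


if __name__ == "__main__":
    print(weighted_edit_distance("ACGTAC", "ACGCAC"))  # one transition, T->C: 1.0
    print(weighted_edit_distance("ACGTAC", "ACGAAC"))  # one transversion, T->A: 2.0
```

Changing the three cost constants changes which sequences count as "near." That choice is exactly where the substitution model enters.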

This is fundamentally better than the Darwinian approach, because a missing link need not exist between nearby molecules: they can be shown to be near by creating intermediates in a lab. One can then argue about the climates and the state of the Earth for any missing-link animals.

Universe evolution is caused not by any God, but by the amount of dark energy created between cycles. What happens at contraction? The information of the contracting part is lost through quantum particles, and the universe contracts to a minimal size of the maximally dense material possible in space! After that, the universe explodes again. The new edition has different dark energy and differs primarily in its space-related and time constants. All other constants have a geometric relation to the free constants that are reset. This is a falsifiable claim! In particular, it appears that the Planck length, the speed of light, the Higgs constant and the (dark) vacuum energy are reset.

Critical falsifiability says that all but the space principles evolve only as the Planck length and the velocity of light do. Note that the smallest time is the time light in free space takes to travel one Planck length.
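The smallest time mentioned above is the Planck time, t_P = l_P / c, where the Planck length is l_P = sqrt(ħG/c³). Below is a quick check with CODATA 2018 values. The constants are passed as parameters only so that, hypothetically, a "reset" value of c or G from another cycle could be tried. That use is illustrative and not part of any established theory.

```python
import math

# CODATA 2018 values, SI units
HBAR = 1.054571817e-34  # reduced Planck constant, J s
G = 6.67430e-11         # Newtonian gravitational constant, m^3 kg^-1 s^-2
C = 299_792_458.0       # speed of light in vacuum, m/s


def planck_length(hbar: float = HBAR, g: float = G, c: float = C) -> float:
    """Planck length l_P = sqrt(hbar * G / c**3), in metres."""
    return math.sqrt(hbar * g / c**3)


def planck_time(hbar: float = HBAR, g: float = G, c: float = C) -> float:
    """Time for light to cross one Planck length: t_P = l_P / c, in seconds."""
    return planck_length(hbar, g, c) / c


if __name__ == "__main__":
    print(f"Planck length: {planck_length():.4e} m")
    print(f"Planck time:   {planck_time():.4e} s")
```

With these constants the script prints roughly 1.6163e-35 m and 5.3912e-44 s.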

Even the Heisenberg uncertainty principle is simply the uncertainty found in all Fourier analysis, applied to the product of complementary variables! Complementary to position is momentum (mass times velocity), just as frequency is complementary to time in signal systems!
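The Fourier version of this statement can be checked numerically. Treat |g(t)|² and |G(f)|² as distributions. For any signal their spreads satisfy Δt·Δf ≥ 1/(4π), with equality only for a Gaussian. Because momentum is p = h/λ, the same inequality for position and spatial frequency becomes Δx·Δp ≥ ħ/2. The grid and the two test pulses below are arbitrary choices for illustration.

```python
import numpy as np


def spread(x, weight):
    """Standard deviation of x under an (unnormalised) weight distribution."""
    p = weight / weight.sum()
    mean = np.sum(x * p)
    return float(np.sqrt(np.sum((x - mean) ** 2 * p)))


def time_frequency_product(t, signal):
    """Delta_t * Delta_f, using |signal|^2 and |FFT(signal)|^2 as weights."""
    dt = t[1] - t[0]
    spectrum = np.fft.fftshift(np.fft.fft(signal))
    f = np.fft.fftshift(np.fft.fftfreq(t.size, d=dt))
    return spread(t, np.abs(signal) ** 2) * spread(f, np.abs(spectrum) ** 2)


if __name__ == "__main__":
    t = (np.arange(4096) - 2048) * 0.01   # symmetric time grid, dt = 0.01
    gabor_limit = 1 / (4 * np.pi)
    gaussian = np.exp(-t**2 / 2)          # minimum-uncertainty pulse
    laplace = np.exp(-np.abs(t))          # two-sided exponential pulse
    print(f"limit     {gabor_limit:.4f}")
    print(f"gaussian  {time_frequency_product(t, gaussian):.4f}")
    print(f"laplace   {time_frequency_product(t, laplace):.4f}")
```

The Gaussian lands on the limit 1/(4π) ≈ 0.0796. The exponential pulse comes out larger; its analytic value is √2/(4π) ≈ 0.113.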

Trigonometric functions are BETTER done as Morlet wavelets in the newer, better quantum theory being written! Its superiority over the usual QM is that Gabor analysis works in both the spatial and the time domains. The Schrödinger equation, by contrast, treats only space as an observable domain (time is just a parameter) and is not even relativistic.
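A Morlet wavelet is a complex exponential under a Gaussian envelope, the same "Gabor atom" that sits exactly at the time-frequency limit. The sketch below scans a test signal with Morlet wavelets and reports which frequency carries the most power. The 7-cycle width, the 200 Hz sampling rate and the 10 Hz test tone are illustrative assumptions. The code shows only the signal-analysis technique, not the quantum theory mentioned in the text.

```python
import numpy as np


def morlet(freq, fs, n_cycles=7.0):
    """Complex Morlet wavelet centred on `freq` Hz, sampled at `fs` Hz."""
    sigma_t = n_cycles / (2 * np.pi * freq)           # Gaussian width in seconds
    t = np.arange(-4 * sigma_t, 4 * sigma_t, 1 / fs)  # +/- 4 sigma support
    wavelet = np.exp(2j * np.pi * freq * t) * np.exp(-t**2 / (2 * sigma_t**2))
    return wavelet / np.sum(np.abs(wavelet))          # unit L1 norm


def wavelet_power(signal, fs, freqs, n_cycles=7.0):
    """Mean squared magnitude of the signal convolved with each Morlet wavelet."""
    return np.array([
        np.mean(np.abs(np.convolve(signal, morlet(f, fs, n_cycles), mode="same")) ** 2)
        for f in freqs
    ])


if __name__ == "__main__":
    fs = 200.0                          # sampling rate, Hz
    t = np.arange(0, 4, 1 / fs)         # 4 s of samples
    tone = np.sin(2 * np.pi * 10 * t)   # 10 Hz test tone
    freqs = np.arange(4, 31)            # candidate frequencies, Hz
    power = wavelet_power(tone, fs, freqs)
    print(f"peak at {freqs[np.argmax(power)]} Hz")
```

The wavelet's width grows as frequency falls, so each wavelet trades time resolution for frequency resolution while staying at the Gabor limit.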

Why dark energy?

It is the energy of space. It does not dilute with expanding space! It is simply set at the creation event of each cycle!
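The non-dilution of vacuum energy is the standard result for a component with equation of state w = -1. In an expanding FLRW universe, each component's density scales as ρ ∝ a^(-3(1+w)), where a is the scale factor (a = 1 today). The densities below are normalised to 1 today purely for illustration.

```python
def density(a, w, rho_today=1.0):
    """Energy density at scale factor a for a component with equation of state w.

    rho scales as a**(-3 * (1 + w)): matter (w = 0) dilutes with volume,
    radiation (w = 1/3) also loses energy to redshift, and vacuum energy
    (w = -1) stays constant.
    """
    return rho_today * a ** (-3.0 * (1.0 + w))


if __name__ == "__main__":
    components = {"matter": 0.0, "radiation": 1.0 / 3.0, "vacuum": -1.0}
    for a in (1.0, 2.0, 10.0):
        row = ", ".join(f"{name}={density(a, w):.4g}" for name, w in components.items())
        print(f"a={a:>4}: {row}")
```

Doubling the size of the universe cuts the matter density by 8 and the radiation density by 16, but leaves the vacuum term unchanged.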

Why assume fluctuation?

Whether a universe expands forever or contracts depends on dark energy! A universe lives on through its cycles until it reaches a perpetually expanding edition.
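In standard cosmology, the dark-energy term decides between eternal expansion and recollapse. The following is a minimal sketch for a spatially flat universe containing only matter and a cosmological constant, so H²/H₀² = Ω_m a⁻³ + Ω_Λ with Ω_m + Ω_Λ = 1; flatness and the absence of radiation are assumptions. A positive Λ term keeps H² positive forever. A negative one makes H² reach zero at a maximum scale factor, after which the universe contracts.

```python
def hubble_ratio_squared(a, omega_m):
    """(H/H0)**2 at scale factor a for a flat matter + Lambda universe."""
    omega_lambda = 1.0 - omega_m  # flatness assumption
    return omega_m * a**-3 + omega_lambda


def turnaround_scale_factor(omega_m):
    """Scale factor where expansion halts, or None if it expands forever."""
    omega_lambda = 1.0 - omega_m
    if omega_lambda >= 0.0:
        return None  # H**2 stays positive for every a > 0
    # Solve omega_m * a**-3 + omega_lambda = 0 for a
    return (omega_m / -omega_lambda) ** (1.0 / 3.0)


def deceleration_parameter(omega_m):
    """q0 = Omega_m / 2 - Omega_Lambda today; q0 < 0 means accelerating."""
    return omega_m / 2.0 - (1.0 - omega_m)


if __name__ == "__main__":
    for om in (0.3, 1.0, 1.3):
        print(om, turnaround_scale_factor(om), deceleration_parameter(om))
```

For Ω_m = 0.3 (roughly today's measured value) there is no turnaround and q₀ ≈ -0.55, so expansion accelerates. A hypothetical Ω_m = 1.3, which implies Ω_Λ = -0.3, turns around at a ≈ 1.63.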

Source of science rules?

Universe evolution. All that is needed for the current epoch of the universe is one series of cycles leading to us or, alternately, one that tends in the limit to no rules at all.

Each universe cycle must be very specific?

Not really! Whether a universe-evolution (UE) series makes sense or not depends on every term differing in dark energy, for all that happens in each cycle is a calibration of space.

What drives this thinking?

Error-correcting codes for quantum computers are already in quantum space! Quantum space is just space with quantum rules. Matter is just Higgs-boson-trapped photons! The real vacuum is just space alive with quantum particles, with fleeting matter and antimatter. This is due to the creation of space at the big bang point in every cycle, and it need not be the same in every universe iteration.
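For readers unfamiliar with quantum error correction, here is the simplest textbook example, the three-qubit bit-flip code, simulated as a state vector. It is an illustration of the kind of code meant, not a model of the vacuum. One logical qubit α|0⟩ + β|1⟩ is spread over three physical qubits. The parities Z₀Z₁ and Z₁Z₂ then reveal which single qubit flipped without revealing α or β. As a simplification, the sketch reads the parities as expectation values instead of measuring ancilla qubits. That shortcut is valid here because after a single bit flip the state is an exact eigenstate of both parity operators.

```python
import numpy as np

I2 = np.eye(2)
X = np.array([[0, 1], [1, 0]], dtype=complex)   # bit flip
Z = np.array([[1, 0], [0, -1]], dtype=complex)  # phase / parity


def on_qubit(gate, k, n=3):
    """Embed a single-qubit gate acting on qubit k into an n-qubit operator."""
    op = np.array([[1.0 + 0j]])
    for q in range(n):
        op = np.kron(op, gate if q == k else I2)
    return op


def encode(alpha, beta):
    """alpha|0> + beta|1>  ->  alpha|000> + beta|111>."""
    state = np.zeros(8, dtype=complex)
    state[0b000] = alpha
    state[0b111] = beta
    return state


def syndrome(state):
    """Parities (Z0Z1, Z1Z2); each is exactly +1 or -1 after at most one bit flip."""
    z0z1 = on_qubit(Z, 0) @ on_qubit(Z, 1)
    z1z2 = on_qubit(Z, 1) @ on_qubit(Z, 2)
    s1 = np.vdot(state, z0z1 @ state).real
    s2 = np.vdot(state, z1z2 @ state).real
    return int(round(s1)), int(round(s2))


# Which qubit to flip back for each syndrome (None: no error detected)
CORRECTION = {(1, 1): None, (-1, 1): 0, (-1, -1): 1, (1, -1): 2}


def correct(state):
    """Apply the recovery X gate indicated by the syndrome."""
    flip = CORRECTION[syndrome(state)]
    return state if flip is None else on_qubit(X, flip) @ state


if __name__ == "__main__":
    logical = encode(0.6, 0.8)                # arbitrary normalised amplitudes
    for k in range(3):
        corrupted = on_qubit(X, k) @ logical  # single bit-flip error on qubit k
        print(k, syndrome(corrupted), np.allclose(correct(corrupted), logical))
```

This code protects only against single bit flips. Practical codes, such as Shor's nine-qubit code or surface codes, also handle phase flips.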

What is this cosmogony based on?

A discrete space-time leads to string theory or to loop quantum gravity (LQG).

A big bounce scenario is natural in LQG.
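In loop quantum cosmology, the big bounce is usually summarised by an effective Friedmann equation for a flat, homogeneous universe. It is quoted here from the LQC literature, not derived; ρ_c is a critical density of the order of the Planck density:

```latex
H^2 = \left(\frac{\dot a}{a}\right)^2
    = \frac{8\pi G}{3}\,\rho\left(1 - \frac{\rho}{\rho_c}\right)
```

For ρ ≪ ρ_c the bracket is essentially 1, and the classical Friedmann equation H² = (8πG/3)ρ is recovered. As a contracting universe approaches ρ = ρ_c, H falls to zero. The collapse therefore halts and turns into expansion instead of reaching a singularity.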

Bounce is the only Universe evolution method for science rules! It is the

No graviton or fundamental dark-matter particle has been found.

Verlinde correctly imagines that there is no such thing as dark matter. His string-theory-inspired thinking (emergent gravity) accounts for galactic rotation curves and for the Einstein bending of light around galaxies! I (a minority view) think Verlinde is right.
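For an isolated point mass M_B, Verlinde's 2016 emergent-gravity paper gives an apparent dark mass M_D(r) = r·sqrt(cH₀M_B/(6G)). This adds an acceleration term that falls off only as 1/r and so flattens the rotation curve. The sketch below assumes that point-mass form as commonly quoted, H₀ = 70 km/s/Mpc, and a hypothetical galaxy mass. Real galaxies are extended, and the formula is meant to apply only well outside the baryons.

```python
import math

G = 6.67430e-11            # Newton's constant, m^3 kg^-1 s^-2
C = 299_792_458.0          # speed of light, m/s
H0 = 70e3 / 3.0857e22      # ASSUMED Hubble constant: 70 km/s/Mpc, in 1/s
A0 = C * H0                # Verlinde's acceleration scale a0 = c * H0


def apparent_dark_mass(m_baryon, r):
    """Apparent dark mass within radius r around a point mass (assumed Verlinde form)."""
    return r * math.sqrt(A0 * m_baryon / (6.0 * G))


def circular_velocity(m_baryon, r):
    """Orbital speed from baryonic plus apparent dark mass."""
    return math.sqrt(G * (m_baryon + apparent_dark_mass(m_baryon, r)) / r)


def newtonian_velocity(m_baryon, r):
    """Orbital speed from the baryonic mass alone, for comparison."""
    return math.sqrt(G * m_baryon / r)


def flat_velocity(m_baryon):
    """Large-r limit: v**4 = G * M * a0 / 6 (a baryonic Tully-Fisher relation)."""
    return (G * m_baryon * A0 / 6.0) ** 0.25


if __name__ == "__main__":
    m = 1e41  # hypothetical galaxy: about 5e10 solar masses of baryons
    for r in (1e20, 1e21, 1e22, 1e23):  # metres; 1 kpc is about 3.1e19 m
        print(f"r={r:.0e} m  newton={newtonian_velocity(m, r) / 1e3:7.1f} km/s  "
              f"verlinde={circular_velocity(m, r) / 1e3:7.1f} km/s")
    print(f"flat limit {flat_velocity(m) / 1e3:.1f} km/s")
```

The Newtonian speed keeps falling as 1/sqrt(r), while the Verlinde curve levels off near the flat limit. The flattening is the feature usually attributed to dark matter.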

Gravity is not a quantum force, hence no graviton (never found)! It is not a product of the big bang.

Neither time nor space is fundamental (hence space-time, as per Einstein); both follow from entropic gravity.
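Entropic gravity here refers to Verlinde's 2010 argument, which can be sketched in a few lines. Its inputs are his postulates:

• the entropy change when a mass m moves Δx toward a holographic screen;

• the Unruh temperature for acceleration a;

• equipartition of the energy Mc² over the N bits on a spherical screen of area A.

```latex
\begin{aligned}
\Delta S &= 2\pi k_B\,\frac{m c}{\hbar}\,\Delta x,
  \qquad k_B T = \frac{\hbar a}{2\pi c},
  \qquad F\,\Delta x = T\,\Delta S
  \;\Rightarrow\; F = m a, \\
N &= \frac{A c^3}{G\hbar}, \quad A = 4\pi R^2,
  \qquad M c^2 = \tfrac{1}{2} N k_B T
  \;\Rightarrow\; k_B T = \frac{G\hbar M}{2\pi c R^2}, \\
F &= T\,\frac{\Delta S}{\Delta x} = \frac{G M m}{R^2}.
\end{aligned}
```

The first line recovers Newton's second law, and the last line recovers Newton's law of gravitation.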

There is no dark energy either; it is quantum vacuum energy (QVE). Distant galaxies accelerate away faster due to QVE.

Why make the massive claim of a new, better cosmogony?

It is the only existing cosmogony that explains the rules of science by universe evolution. It eliminates dark energy and dark matter through Dr. Verlinde's work, and it explains the quantum vacuum with natural quantum error-correction codes.

The last reference indicates why now is the earliest the above could have been written!

What is the falsifiability here?

Only universe evolutions that differ in space terms are correct. All other constants must be expressible in terms of geometry and the varying space constants. The Higgs value and the vacuum strength likely come from space evolution. Any amount of universal fine-tuning is then likely.

Where are they?

It is sheer vanity to consider humans the only intelligent species. It is also unbreakable physics that makes light speed a no-go limit: wherever they are, they stay far away. NO travel faster than light is possible; anything with mass would need infinite energy to reach light speed. Normal information transfer through entanglement is ruled out by a no-go theorem. The intuition is simple. Even though entanglement looks faster than light, two entangled objects only share a state, and ANY unnatural effort at changing the state of one kills the entanglement!
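The standard result behind this no-go statement is the no-communication theorem. Suppose Alice acts on her half of an entangled pair, either with a unitary or with a measurement whose outcome Bob is not told. In every case Bob's reduced density matrix is unchanged, so he sees no signal. Below is a small numerical check for a Bell pair; the random unitary and the seed are arbitrary choices.

```python
import numpy as np


def bell_state():
    """(|00> + |11>) / sqrt(2); the first tensor factor is Alice's qubit."""
    return np.array([1, 0, 0, 1], dtype=complex) / np.sqrt(2)


def bob_density_matrix(state):
    """Bob's reduced density matrix: trace out Alice from a two-qubit vector."""
    psi = state.reshape(2, 2)   # psi[alice, bob]
    return psi.T @ psi.conj()   # sum over Alice's index


def random_unitary(rng):
    """Random 2x2 unitary from the QR decomposition of a complex Gaussian matrix."""
    z = rng.normal(size=(2, 2)) + 1j * rng.normal(size=(2, 2))
    q, r = np.linalg.qr(z)
    return q * (np.diag(r) / np.abs(np.diag(r)))


def bob_after_alice_measures(state, basis):
    """Bob's state averaged over the outcomes of Alice measuring in `basis`.

    The columns of `basis` are Alice's measurement vectors. Bob is not told
    the outcome, so his description is the probability-weighted mixture,
    which equals the sum of the unnormalised post-measurement reduced states.
    """
    rho = np.zeros((2, 2), dtype=complex)
    for k in range(2):
        v = basis[:, k]
        projector = np.kron(np.outer(v, v.conj()), np.eye(2))
        rho += bob_density_matrix(projector @ state)
    return rho


if __name__ == "__main__":
    rng = np.random.default_rng(seed=1)
    psi = bell_state()
    u = random_unitary(rng)
    rotated = np.kron(u, np.eye(2)) @ psi   # Alice acts on her qubit only
    print(np.round(bob_density_matrix(psi), 6))
    print(np.round(bob_density_matrix(rotated), 6))
    print(np.round(bob_after_alice_measures(psi, u), 6))
```

All three printed matrices are the maximally mixed state I/2. Correlations appear only when the two sides later compare results over an ordinary, light-speed-limited channel.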

Particles

It is natural for us to consider everything as space-like, since space is what evolves. Thus the idea of particles as creation-time black holes is natural.

This idea is studied in some depth here. Only some of these particles are stable; others are created in very energetic collisions and rapidly evaporate into photons. Black holes are distinguished by when they were created: stellar or creation-time.
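The rapid evaporation of small black holes is Hawking radiation. Below is a sketch of the standard semiclassical formulas: T_H = ħc³/(8πGMk_B), and the photon-only lifetime estimate t ≈ 5120πG²M³/(ħc⁴). The lifetime ignores greybody factors and other particle species. Applying either formula to particle-scale black holes is the author's hypothesis rather than tested physics.

```python
import math

# CODATA 2018 values, SI units
HBAR = 1.054571817e-34   # reduced Planck constant, J s
C = 299_792_458.0        # speed of light, m/s
G = 6.67430e-11          # Newtonian gravitational constant, m^3 kg^-1 s^-2
K_B = 1.380649e-23       # Boltzmann constant, J/K
M_SUN = 1.98847e30       # solar mass, kg


def hawking_temperature(mass_kg):
    """Hawking temperature T_H = hbar c^3 / (8 pi G M k_B), in kelvin."""
    return HBAR * C**3 / (8 * math.pi * G * mass_kg * K_B)


def evaporation_time(mass_kg):
    """Photon-only lifetime estimate 5120 pi G^2 M^3 / (hbar c^4), in seconds."""
    return 5120 * math.pi * G**2 * mass_kg**3 / (HBAR * C**4)


if __name__ == "__main__":
    for mass in (M_SUN, 1e12, 1e3):
        print(f"M={mass:.3e} kg  T={hawking_temperature(mass):.3e} K  "
              f"lifetime={evaporation_time(mass):.3e} s")
```

A solar-mass black hole comes out at about 6.2 × 10⁻⁸ K. Halving the mass doubles the temperature and cuts the lifetime eightfold, so the smallest holes are the hottest and die fastest.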

Using encryption to communicate faster than light

Impractical but doable.